Running Vitis AI with the Kria Starter Kit

Try Using Custom Models

Release date: Feb 22, 2023

Vitis-AI can compile trained AI models from TensorFlow and other frameworks so that they run on the board.

In this article, we create an AI model, train it, and compile it for the KV260 to get it running.

As the example we use MNIST (classification of handwritten digit images), which is widely used when learning how to implement AI.

Once the AI model is compiled, we confirm that it runs on the KV260 following the same flow as in Try Using Model Zoo.

MNIST Overview

MNIST is a dataset of handwritten digit images (0-9) used for image classification.

Because the dataset is readily available from TensorFlow, it is well suited to learning how to create AI models and how to save and reuse trained models.

Overview of board-oriented compilation methods

The following is an overview of the compilation sequence.

1. Prepare a trained AI model

2. Quantize the AI model

3. Compile for the board

Steps 2 and 3 are provided by Vitis-AI, so if you have a trained AI model, you can compile it and run it on your board.

Note that quantization changes the numerical precision of AI inference, so the inference results before and after quantization will not be exactly the same.

Note also that step 3 must target the DPU architecture of the board.
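To illustrate why results differ after quantization, the following minimal sketch rounds a few floating-point values to signed 8-bit integers with a power-of-two scale and converts them back. It only demonstrates the rounding effect and is not the algorithm used by the Vitis-AI quantizer; the function names, the number of fractional bits, and the sample values are all hypothetical.

```python
def quantize_int8(values, frac_bits):
    """Map floats to signed 8-bit integers using a scale of 2**frac_bits."""
    scale = 2 ** frac_bits
    quantized = []
    for v in values:
        q = round(v * scale)
        q = max(-128, min(127, q))  # clamp to the int8 range
        quantized.append(q)
    return quantized


def dequantize_int8(quantized, frac_bits):
    """Convert 8-bit integers back to floats with the same scale."""
    scale = 2 ** frac_bits
    return [q / scale for q in quantized]


# Hypothetical weights, chosen only for illustration
weights = [0.1234, -0.5678, 0.9, 1.5]
q = quantize_int8(weights, frac_bits=6)
restored = dequantize_int8(q, frac_bits=6)

for original, back in zip(weights, restored):
    print(f"{original:+.4f} -> {back:+.4f} (error {abs(original - back):.4f})")
```

Values that fit in the int8 range come back with a small rounding error, while values outside it are clamped. Errors of this kind are what make the quantized model's outputs differ slightly from those of the original model.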

MNIST training and quantization with TensorFlow2

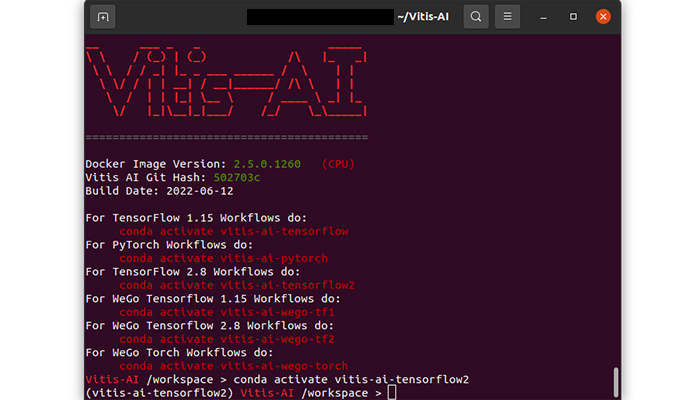

Start the Vitis-AI development environment and run conda activate vitis-ai-tensorflow2 to activate the TensorFlow2 environment.

The Vitis-AI development environment is not required for training AI models, but because it already provides a TensorFlow2 environment, this article trains the model there as well.

Vitis-AI Development Environment

Create a working directory and move into it.

Creating a working directory

The script below creates an AI model, trains it on MNIST, and then quantizes and saves the model.

Save the following as my_mnist_test.py and run it with python my_mnist_test.py.

See the comments in the code for details.

Note that the layer configuration and training parameters of the AI model have not been carefully tuned.

The random seed is also fixed so that the results do not change from run to run.

import tensorflow as tf

from tensorflow.keras.models import load_model

from tensorflow_model_optimization.quantization.keras import vitis_quantize

# Fix the random seed

tf.random.set_seed(0)

# Prepare the datasets for training and testing

# load_data() returns the MNIST training and test sets

(x_train, y_train),(x_test, y_test) = tf.keras.datasets.mnist.load_data()

# Normalize image data for training

x_train = x_train/255.0

x_test = x_test/255.0

# Build the list of layers for the AI model

layers_list = []

# Flatten each image into a one-dimensional array

layers_list.append(tf.keras.layers.Flatten(input_shape=(28, 28, 1)))

# Fully connected layer (128 units) with ReLU activation

layers_list.append(tf.keras.layers.Dense(128, activation='relu'))

# Dropout(0.2)

layers_list.append(tf.keras.layers.Dropout(0.2))

# Fully connected layer (10 units) with softmax activation; this is the final output

layers_list.append(tf.keras.layers.Dense(10, activation='softmax'))

# Build the AI model from the layers

mnist_model = tf.keras.models.Sequential(layers_list)

# Configure training (optimizer, loss, metric)

mnist_model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# Train the AI model

mnist_model.fit(x_train, y_train, batch_size=128, epochs=30, validation_data=(x_test, y_test))

# Quantize and save the AI model

quantizer = vitis_quantize.VitisQuantizer(mnist_model)

# Calibration needs about 100-1000 unlabeled samples, so use 500 here

quantized_model = quantizer.quantize_model(calib_dataset=x_train[0:500])

quantized_model.save("quantized_model.h5")
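As a sanity check on the size of the model defined above, the number of trainable parameters can be worked out by hand. The sketch below assumes the layer sizes from the script (a 28x28 input, Dense(128), Dense(10)); the helper name is hypothetical. Flatten and Dropout have no trainable parameters, so only the two Dense layers contribute.

```python
def dense_params(inputs, units):
    """Weights plus one bias per unit for a fully connected layer."""
    return inputs * units + units


flattened = 28 * 28                     # 784 values after Flatten
hidden = dense_params(flattened, 128)   # first Dense layer
output = dense_params(128, 10)          # final Dense layer
total = hidden + output

print(hidden, output, total)  # 100480 1290 101770
```

The total should agree with the parameter count reported by mnist_model.summary() if that call is added to the script.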

Compilation for KV260

The trained and quantized AI model is then compiled for the target board in the Vitis-AI environment.

First, create arch.json to specify the DPU architecture. This article uses B4096 (fingerprint: 0x101000016010407), so save the following as arch.json.

{

"target": "DPUCZDX8G_ISA1_B4096"

}

Compilation is done using the vai_c_tensorflow2 command.

Save the following as my_mnist_compile.sh and run it in your working directory.

#!/bin/bash

ARCH=./arch.json

COMPILE_KV260=./outputs_kv260

vai_c_tensorflow2 \

--model ./quantized_model.h5 \

--arch ${ARCH} \

--output_dir ${COMPILE_KV260} \

--net_name my_mnist_test

| Argument | Meaning |
|---|---|
| model | Quantized model to compile |
| arch | DPU architecture file (must match the board's DPU architecture) |
| output_dir | Output directory |
| net_name | Name of the compiled AI model |

The output file my_mnist_test.xmodel, written to the outputs_kv260 directory, is the compiled AI model.
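For readers who prefer to drive the compile step from Python, the following sketch writes arch.json and assembles the same vai_c_tensorflow2 command line as the shell script. It uses only the flags and file names shown above; the helper function names are hypothetical, and the sketch only prints the command rather than running it.

```python
import json
import shlex
from pathlib import Path


def write_arch_json(path, target="DPUCZDX8G_ISA1_B4096"):
    """Write the DPU target description read by the compiler."""
    Path(path).write_text(json.dumps({"target": target}, indent=4) + "\n")


def build_compile_command(model, arch, output_dir, net_name):
    """Return the vai_c_tensorflow2 invocation as an argument list."""
    return [
        "vai_c_tensorflow2",
        "--model", str(model),
        "--arch", str(arch),
        "--output_dir", str(output_dir),
        "--net_name", net_name,
    ]


def expected_xmodel(output_dir, net_name):
    """Path where the compiled model is expected, per the article."""
    return Path(output_dir) / f"{net_name}.xmodel"


cmd = build_compile_command(
    "./quantized_model.h5", "./arch.json", "./outputs_kv260", "my_mnist_test"
)
print(shlex.join(cmd))
print("Expected output:", expected_xmodel("./outputs_kv260", "my_mnist_test"))
```

Inside the Vitis-AI environment, one could call write_arch_json("arch.json") and then pass cmd to subprocess.run(cmd, check=True) to perform the compilation.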

Download the files used in this article

Download the Jupyter Notebook and the test image for running AI inference on the KV260.

The download contains the following files.

| File name | Description |
|---|---|
| CustomModel_test.ipynb | Jupyter Notebook file that performs AI inference |
| my_mnist_test.jpg | Test image |

Deployment of AI inference files

Place the following three files (the compiled AI model and the two downloaded files) on the microSD card.

| File name | Description |
|---|---|
| my_mnist_test.xmodel | Trained and compiled AI model |
| CustomModel_test.ipynb | Jupyter Notebook file that performs AI inference |
| my_mnist_test.jpg | Test image |

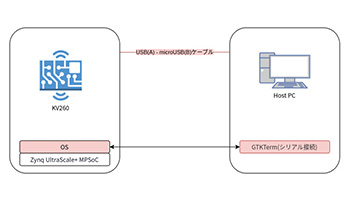

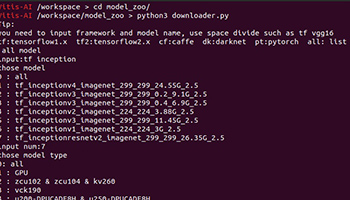

See Try Using Model Zoo for placement instructions.
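Before copying, it can help to confirm that all three files listed above are present in one folder. The sketch below is a small check using only the file names from the table; the folder name ./deploy and the helper name are hypothetical.

```python
from pathlib import Path

REQUIRED_FILES = [
    "my_mnist_test.xmodel",
    "CustomModel_test.ipynb",
    "my_mnist_test.jpg",
]


def missing_files(directory, required=REQUIRED_FILES):
    """Return the required file names that are not found in directory."""
    base = Path(directory)
    return [name for name in required if not (base / name).is_file()]


# Hypothetical folder; replace with the one to be copied to the microSD card
deploy_dir = Path("./deploy")
missing = missing_files(deploy_dir)
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("All files are in place.")
```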

Verify operation of compiled AI model

The compiled AI model is used in the same way as in Try Using Model Zoo.

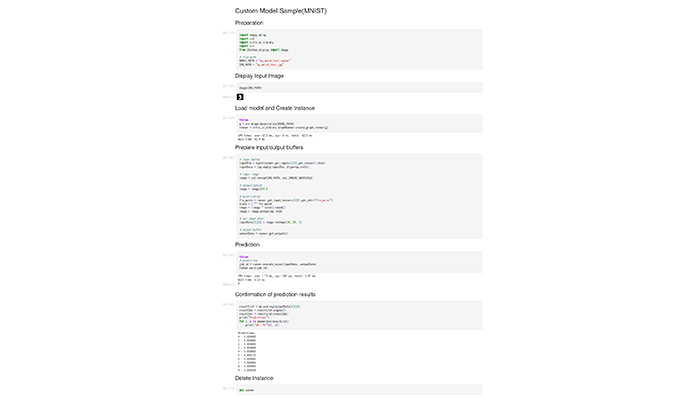

Select CustomModel_test.ipynb in Jupyter Notebook and run each cell.

If AI inference completes without errors, the model is working.

The execution result is shown in the following image.

AI inference is performed with a test image of the digit 3 as input, and the result confirms that the model classifies it correctly.

Example of AI inference execution
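The notebook's own post-processing is not reproduced in this article, but the way a 10-class output is turned into a digit can be sketched as follows. The scores below are hypothetical values invented for illustration, and the function names are assumptions; the sketch simply applies softmax and picks the index with the highest probability.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]  # subtract peak for stability
    total = sum(exps)
    return [e / total for e in exps]


def predict_digit(scores):
    """Return the most likely digit and its probability."""
    probs = softmax(scores)
    digit = max(range(len(probs)), key=probs.__getitem__)
    return digit, probs[digit]


# Hypothetical scores for digits 0-9; index 3 holds the largest value
example_scores = [-2.0, -1.5, 0.3, 6.2, -3.1, 1.0, -4.0, -0.7, 0.9, -1.2]
digit, confidence = predict_digit(example_scores)
print(f"Predicted digit: {digit} (confidence {confidence:.3f})")
```

If the model output has already passed through softmax, taking the index of the largest value alone gives the same predicted digit.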

Summary

We created an AI model, compiled it for the board, and ran it on the KV260.

We have confirmed that users can run their own AI models on the KV260.

However, not all operators are supported, so for some AI models quantization or compilation may fail, or performance may be insufficient.

See Support Information for details.

* All names, company names, product names, etc. mentioned herein are trademarks or registered trademarks of their respective companies.